Accounting for Bias

Key Ideas

Generative AI can perpetuate biases from its training data, and it can be difficult to account for user biases in AI outputs, especially when the user is evaluating those outputs. Taking generative AI outputs at face value amplifies this challenge.

Existing scholarship acknowledges bias in AI-generated content and its implications (e.g., Robinson & Hollett, 2024; Su & Yang, 2023). Less attention has been paid to strategies for intervening in these biases, especially biases that originate with the user rather than the tool.

Because human errors and biases are inevitable, whether in the training data or in human inputs to chatbots, humans play an important role in ensuring the quality and accuracy of AI-generated outputs (Markauskaite et al., 2022; Robinson & Hollett, 2024).

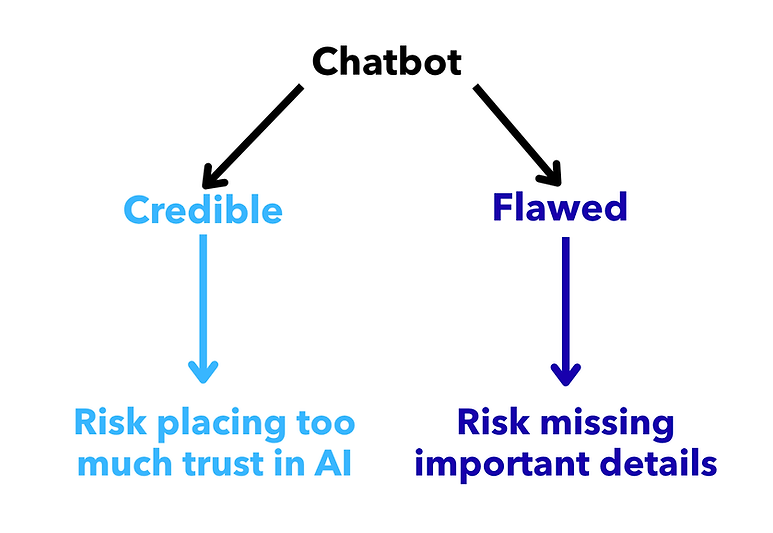

It is just as necessary to look at how users' perceptions of AI affect their use of these technologies, because those perceptions shape both trust and expectations. Learners and teachers have preconceived notions of AI that influence how they use the technology (Van Poucke, 2024) and how they navigate biases.

If viewers see the chatbot as

So, what can we do?

AI and new innovations like neurotechnologies will become increasingly embedded into knowledge-making practices (even more than they already are). As such, Sarah Elaine Eaton (2023) called for pedagogies and institutional shifts that reconsider how knowledge is created and the ethics that guide knowledge making. Bias cannot be eliminated, but it can be mitigated.

Diversity fine-tuning is one potential avenue to explore. In this approach, LLMs are trained with diverse datasets to make them more equitable and better able to respond to a range of requests (Elsharif et al., 2025). Research on these techniques is still in its infancy, so more needs to be done to explore what systemic biases the chatbot has and what biases the user may be bringing to it.

“The question of how we prepare people for an AI world is fundamentally a question of values” (Markauskaite et al., 2022, p. 9).

The question of values is at the heart of using generative AI. Framing AI use as a question of values creates a shared space for dialogue between stakeholders (e.g., learners, teachers) to co-develop AI policies, an approach advocated by Higgs and Stornaiuolo (2024) and Vetter et al. (2024). Starting from a common understanding, creating policy together, and having instructors and learners assess these policies collectively would establish a solid foundation that accounts for AI's place and application in pedagogies. By extension, it becomes easier to help learners account for biases in AI outputs in ways that respond to disciplinary ways of knowing and doing.

Remember

Conversations between stakeholders, particularly between teachers and learners, can help build policies that respond to current pedagogical contexts and help learners navigate biases that exist in AI outputs.

Biases do not originate only in the chatbot's training data. AI users bring their own biases to AI interactions, and these show up in subtle ways: in the prompts they create, in the way they evaluate outputs, and in the ways they revise those outputs. Accounting for both systematic and user-generated biases is important to using generative AI well.